Facebook revamps its takedown guidelines

Facebook is providing the public with more information about what material is banned on the social network.

Its revamped community standards now include a separate section on "dangerous organisations" and give more details about what types of nudity it allows to be posted.

The US firm said it hoped the new guidelines would provide "clarity".

One of its safety advisers praised the move but said that it was "frustrating" other steps had not been taken.

Facebook says about 1.4 billion people use its service at least once a month.

Confused users

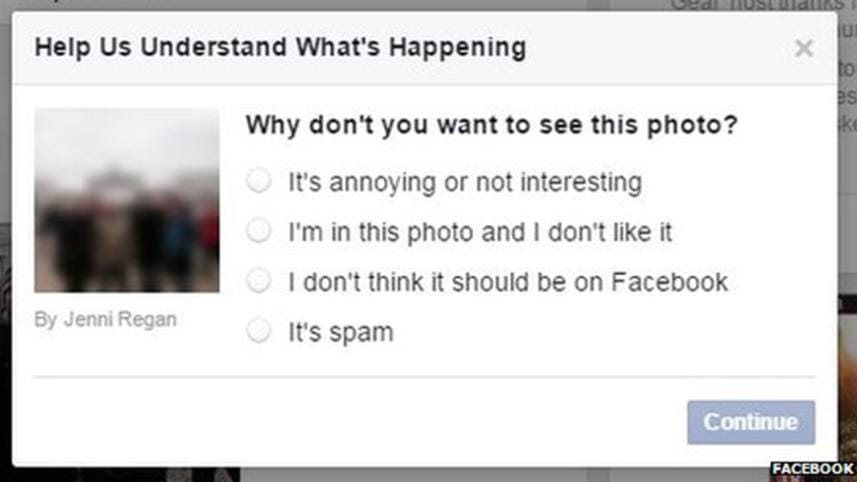

The new guide will replace the old one on the firm's website and will be sent to users who complain about others' posts.


Monika Bickert, Facebook's global head of content policy, said the rewrite was intended to address confusion about why some takedown requests were rejected.

"We [would] send them a message saying we're not removing it because it doesn't violate our standards, and they would write in and say I'm confused about this, so we would certainly hear that kind of feedback," she told the BBC.

"And people had questions about what we meant when we said we don't allow bullying, or exactly what our policy was on terrorism.

"[For example] we now make clear that not only do we not allow terrorist organisations or their members within the Facebook community, but we also don't permit praise or support for terror groups or their acts or their leaders, which wasn't something that was detailed before."

Bickert stressed, however, that the policies themselves had not changed.

Buttocks ban

The new version of the guidelines runs to nearly 2,500 words, almost three times as long as before.

The section on nudity, in particular, is much more detailed than the vague talk of "limitations" that featured previously.

Facebook now states that images "focusing in on fully exposed buttocks" are banned, as are "images of female breasts if they include the nipple".

It adds that the restrictions extend to digitally-created content, unless posts are for educational or satirical purposes. Likewise, text-based descriptions of sexual acts that contain "vivid detail" are forbidden.

However, Facebook adds that it will "always allow photos of women actively engaged in breastfeeding or showing breasts with post-mastectomy scarring".

Other sections with new details include:

- Bullying - images altered to "degrade" an individual and videos of physical bullying posted to shame the victim are now expressly forbidden

- Hate speech - while the site maintains the same list of banned topics, it now adds that people are allowed to share examples of others' hate speech in order to raise awareness of the issue, but they must "clearly indicate" that this is their purpose

- Criminal activity - the network now states that users are prohibited from celebrating any crimes they have committed, but adds that they are allowed to argue for the legalisation of activities that are currently illegal

- Self-injury - the site says that it will remove content that identifies victims of self-injury and targets them for attack, even if done humorously. But it says that it does not consider "body modification" to be a type of self-injury

Graphic violence

The changes have been welcomed by the Family Online Safety Institute (Fosi), one of five independent organisations that make up Facebook's safety advisory board.

"I think it's great that Facebook has revamped its community standards page to make it both more readable and accessible," the body's chief executive Stephen Balkam told the BBC.

"I wish more social media sites and apps would follow suit."

But he expressed concern that Facebook was still not doing enough to protect youngsters from seeing disturbing videos.

While Facebook's new guidelines state that users should "warn their audience about what they are about to see if it includes graphic violence", the site provides no way for members to add cover pages to clips to prevent them from auto-playing.

In January, after months of pressure from Fosi and others, Facebook revealed it had introduced a way for its own staff to add such "interstitial" warnings. They have been used over clips showing the murder of a French policeman in the Charlie Hebdo attacks among other material.

However, Facebook only adds the alerts if it has received a complaint, rather than letting the original posters do so.

"It is frustrating that after all this time, Facebook users are still not able to put up interstitials on violent or controversial images and videos," said Balkam.

"Facebook has done the right thing to place interstitials themselves once a user has reported an image or extreme content, but my hope is that they will bring this to ordinary users sooner rather than later."

Facebook has acknowledged the point.

"We are always looking to provide more tools for people to use themselves," responded Bickert.

"Right now we are not in a position to provide those tools to people, but we are always looking at ways to do better."

For all latest news, follow The Daily Star's Google News channel.
