What threats does ChatGPT pose to academia?

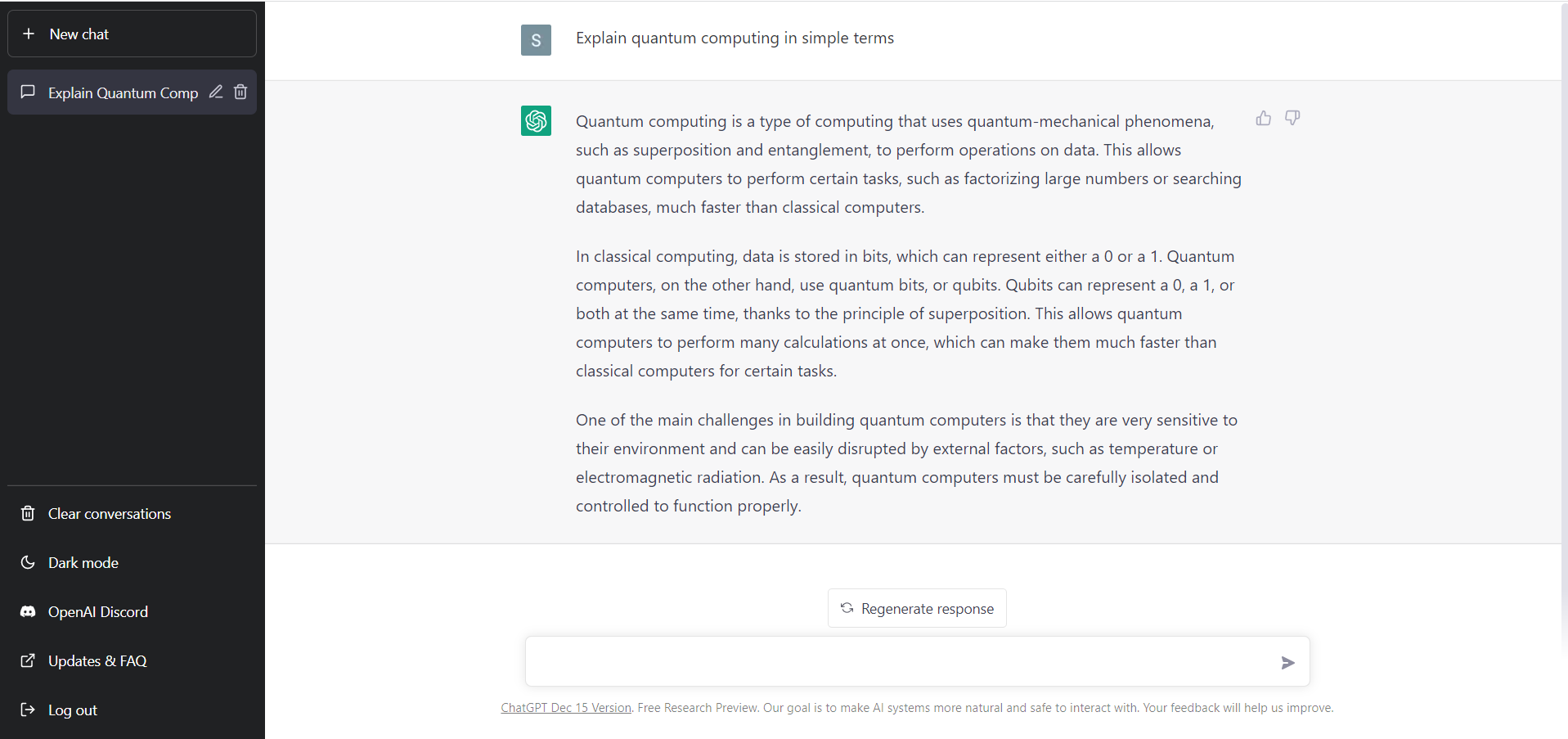

US-based artificial intelligence company OpenAI's Generative Pre-trained Transformer 3 (GPT-3) family of language models, on which ChatGPT is built, has brought about a revolution in AI with its advanced language-processing capabilities. Its wide availability and ease of access bring both benefits and risks. There is potential for misuse, and educators and researchers must be aware of the risks associated with this technology.

One way in which students may misuse GPT-3 is by using it to complete their assignments without actually understanding the concepts or the problem-solving strategies involved. This can lead to dependency on the technology and a lack of critical-thinking and problem-solving skills. These skills are essential for success in both education and professional careers, and their absence can have a significant impact on a student's future prospects.

Another way in which students may abuse GPT-3 is by using it to plagiarise. Its advanced language-processing capabilities make it possible to generate high-quality text, which students may be tempted to copy and paste into their own work. This undermines the educational process and devalues the efforts of students who complete their work independently. Plagiarism is a serious offence and can result in academic dishonesty charges, which can have a lasting impact on a student's future.

Researchers may misuse GPT-3 by using it to manipulate or fabricate data, which can compromise the integrity of research and have significant consequences for both the individual researcher and the wider scientific and research community.

In addition, GPT-3 has the potential to perpetuate biases and harmful stereotypes. The technology is trained on a large amount of text data that contains both explicit and implicit biases. If these biases are not addressed, they can be amplified and perpetuated through the technology's outputs. This can have serious consequences for marginalised groups and undermine efforts to address societal inequalities.

How do we minimise the risks and maximise the benefits, then?

Our education system must integrate the ethical and responsible use of these tools into the curricula and monitor their impact on student learning and development. Strict ethical standards for research must be adopted that address biases and harmful outcomes in the technology's outputs, address the potential for malicious use, and define ways to prevent it.

Schools, colleges and universities must build ethical rules for the use of new technologies into their curricula and into their online assignment and exam systems, and violations should be met with proportionate penalties. It is important to educate both teachers and students about the dangers of plagiarism and the consequences of academic dishonesty. Teachers need to give students new guidelines on the acceptable scope of AI-generated text, critically evaluate work produced with such tools, and make sure their use does not come at the expense of independent thinking and problem-solving skills.

To restrict the misuse of GPT-3 by researchers, strict ethical standards and guidelines have to be put in place to ensure ethical data collection and analysis and to prohibit data manipulation or fabrication. Researchers should also be required to undergo more rigorous peer review and to collaborate more closely, so that their work is transparent, accurate and reliable.

On the flip side, language models such as ChatGPT can help improve students' writing and communication skills, as long as they are used as tools to support learning rather than as a replacement for it.

Bangladeshi school exams are mostly in written form, but there is a growing demand for introducing oral exams and assessing presentation skills comprehensively. In Bangladeshi universities, plagiarism is still a massive problem. In these circumstances, preventing plagiarism carried out with AI tools requires new and improved measures.

First, the University Grants Commission (UGC) as well as all the education boards must make the use of plagiarism-detection software mandatory. Second, there must be new measures to stop students from copying and pasting AI-generated text. Theses and assignments should be defended through new mechanisms that evaluate students' understanding of their subjects as well as their critical-thinking capabilities.

Monitoring ChatGPT's influence on learning and research, and addressing biases and other harmful aspects of the technology's outputs, should be treated as a serious concern. The curricula have to be redesigned and developed in a new way in the age of freely accessible AI.

The UGC can create a dedicated national consortium with subject-matter experts from all fields of education across Bangladeshi educational institutions. This body can ensure that no undergraduate, graduate or postgraduate research topic is duplicated, even partially, at the national level. It can also monitor and nurture the quality of Bangladeshi theses, journals and research papers.

Bangladeshi researchers perform very poorly in Scopus, the world's largest peer-reviewed abstract and citation database. According to Scopus, in 2021 Bangladeshi researchers published only 11,477 papers, against 222,849 from India and 35,663 from Pakistan. Bangladesh scores very poorly in other databases too. In Scimago's 2020 rankings, Bangladesh did not have a single Q1 or Q2 research paper.

In the Global Knowledge Index 2021, Bangladesh ranked 120th out of 154 countries, and it ranked 102nd among the 132 economies featured in the Global Innovation Index 2022. In the age of freely accessible AI such as ChatGPT, the quality of research and innovation by Bangladeshi students and researchers may decline further, so proper steps must be taken now.

Disclaimer: This writer used ChatGPT to prepare this piece.

Faiz Ahmad Taiyeb is a Bangladeshi columnist and writer living in the Netherlands. Among other titles, he has authored 'Fourth Industrial Revolution and Bangladesh' and '50 Years of Bangladesh Economy.'

For all latest news, follow The Daily Star's Google News channel.