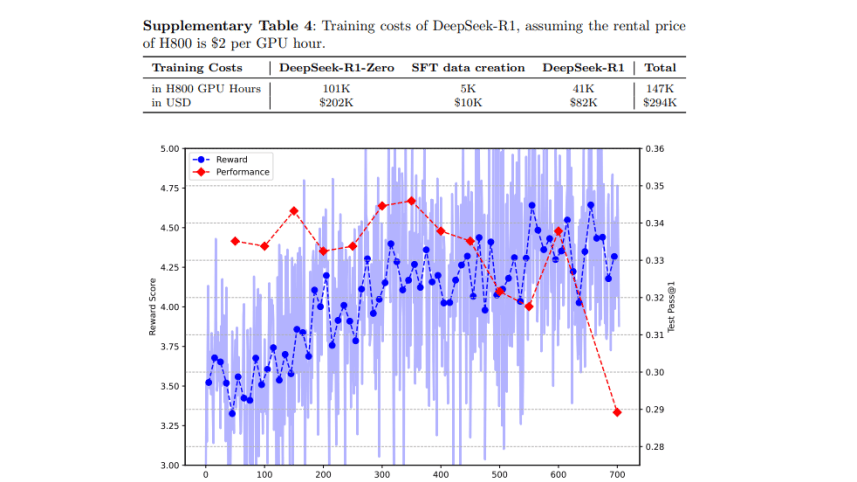

DeepSeek spent $294,000 to train its popular AI model

DeepSeek, the Chinese artificial intelligence (AI) developer, has disclosed that training its flagship R1 model cost $294,000, a figure far lower than the sums often cited for other leading AI models.

The details, published on September 17, 2025 in the scientific journal Nature, mark the first time the Hangzhou-based company has put a number on its training expenses.

The paper, co-authored by founder Liang Wenfeng, acknowledged that DeepSeek had used Nvidia's A100 chips in the early stages of development. After that initial phase, DeepSeek-R1 underwent an 80-hour training run on a cluster of 512 Nvidia H800 chips.

Training costs refer to the large sums required to run powerful chips over extended periods to process vast amounts of text and code.

U.S. export restrictions have barred shipments of A100 and the more powerful H100 chips to China since October 2022, leaving the H800, a version Nvidia developed for the Chinese market, as a legally available alternative at the time.

Sam Altman, CEO of OpenAI, said in 2023 that his company's foundation models had cost "much more" than $100 million to train, though OpenAI has not released exact figures.

DeepSeek first gained global attention in January after unveiling what it claimed were lower-cost systems, sparking market fears that cheaper Chinese models might undermine the dominance of established AI players such as Nvidia. Since then, the company has kept a low public profile, offering only limited product updates.

Many AI researchers had earlier suggested that the company may have relied on "distillation", a process in which one AI model learns from the outputs of another, to lower its costs. DeepSeek has defended the approach, saying it broadens access to advanced technology by making models cheaper to train and less energy-intensive.

In January, DeepSeek said it had used Meta's open-source Llama model for some distilled versions of its own models.

The Nature paper also said that training data for DeepSeek's V3 model included crawled web pages containing a "significant number" of responses generated by OpenAI's models, which may have led the model to acquire knowledge from those systems indirectly. The company said this was incidental rather than deliberate.

For all latest news, follow The Daily Star's Google News channel.