As deepfakes blur reality, voters must learn to doubt what they see

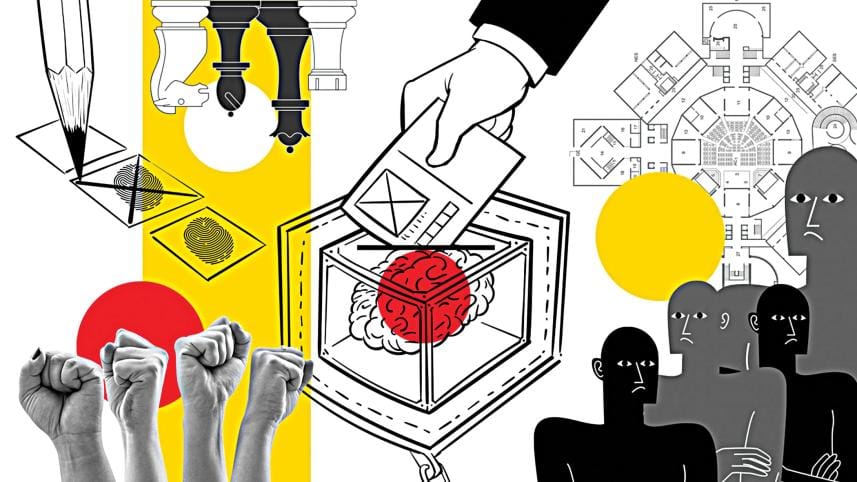

The most significant threat to democracy today no longer comes from ballot-box manipulation or overt displays of brute force. It enters quietly, almost invisibly, through the screen of a smartphone: an AI-generated video so realistic that it can upend voter perceptions within seconds. The signs are already there. Bangladesh's upcoming parliamentary election risks becoming a cautionary tale for others, demonstrating how generative artificial intelligence, particularly deepfake technology, can be weaponised to distort public opinion, undermine political opponents, and create widespread confusion at a pace that overwhelms traditional safeguards.

During the last election cycle, the country witnessed a relatively small but calculated deployment of deepfakes. Videos surfaced showing opposition leaders "saying" things they had never said, their faces manipulated to deliver extreme positions designed to erode public trust. AI-generated news anchors appeared to broadcast fabricated propaganda. Perhaps most dangerously, on the eve of the polls, sudden false announcements circulated claiming that specific candidates had withdrawn from the race. These were not accidental errors or harmless rumours; they were deliberate, coordinated attacks intended to make voters doubt what they see and hear. These threats have since multiplied. In the new reality, the old phrase "seeing is believing" no longer holds. It must now evolve into "seeing is suspecting."

In the absence of reliable large-scale detection technology, the responsibility for identifying deepfakes now falls heavily on individual citizens. The speed and volume of social media circulation have rendered automated systems insufficient. Every voter must therefore rely on a combination of intuition, careful observation, and basic digital forensic awareness. Deepfakes often reveal themselves through tiny imperfections in human expression, details that advanced algorithms still struggle to replicate. By closely observing how light reflects on a face, how shadows form, or whether skin tones across the face, neck, and hands align naturally, viewers can detect subtle anomalies.

Another significant giveaway lies in the mismatch between lip movements and voice. In several manipulated videos, mouth movements failed to align fully with the spoken words. At times, the speech rhythm felt unnaturally smooth, lacking the pauses, breaths, and imperfect cadences characteristic of human speech. These are strong indicators of an artificially generated voice track layered over a synthetic video.

Deepfake detection, however, is not limited to technical cues alone; contextual behaviour provides equally powerful signals. If a video portrays a political leader delivering a message that sharply contradicts their known stance, history, or temperament, it should immediately raise suspicion. Deepfakes are deliberately crafted to provoke emotional responses, such as shock, anger, and outrage. The moment a viewer experiences an instant emotional surge is precisely when scepticism becomes most necessary. Such content is often accompanied by urgent prompts such as "share this immediately!"—a tactic designed to bypass judgment and accelerate the spread of falsehoods.

Bangladesh has not been totally blind to this emerging threat. The Election Commission (EC) has publicly acknowledged that artificial intelligence may pose a greater danger than traditional forms of electoral violence. In response, the EC has proposed establishing a centralised cell dedicated to combating AI-generated disinformation. This unit is intended to work in coordination with the Bangladesh Telecommunication Regulatory Commission (BTRC), the ICT Division, and national cybersecurity agencies to enable the rapid removal of harmful content. The EC has also recommended amendments to the Representation of the People Order (RPO) to formally regulate the misuse of AI and social media during elections.

These domestic initiatives have been reinforced through international collaboration. Under the leadership of the United Nations Development Programme (UNDP), the BALLOT Project—implemented in partnership with UN Women and Unesco—has taken shape. Unlike routine monitoring initiatives, this project prioritises building local capacity, strengthening civic awareness, and integrating global best practices to protect Bangladesh's electoral information environment. Collectively, these efforts signal that both state and international stakeholders recognise the seriousness of the digital threat and are committed to resisting it.

Yet, significant structural challenges persist. Most deepfake detection tools available globally are trained on English-language datasets, creating a substantial gap when verifying Bangla-language audio and video. As a result, viral Bangla deepfakes cannot be quickly or reliably authenticated through automated systems. Independent fact-checking organisations, such as Rumor Scanner and Dismislab, continue to face chronic shortages of funding, skilled personnel, and AI forensic capacity, limiting their ability to respond effectively to high volumes of misinformation. This challenge is compounded by a growing trust deficit in the media ecosystem in general, with many citizens now interpreting both official statements and independent fact-checks through partisan lenses, weakening counter-disinformation efforts. Digital literacy also remains uneven, particularly in rural and low-connectivity areas, where citizens are less equipped to identify subtle signs of manipulation.

To bridge these gaps, a coordinated, citizen-centric strategy is essential. When voters encounter suspicious content, they must take immediate and structured action. The first step is to report the material directly on the relevant platform—Facebook, YouTube, or TikTok—using built-in options such as "False Information" or "Impersonation," enabling moderation systems to respond promptly. The second step is to submit the content to independent fact-checkers for verification, ensuring that an authoritative and non-partisan assessment enters the public domain. The third step is to notify relevant authorities, such as local EC offices or the Bangladesh Police Cyber Crime Unit, when the content clearly violates electoral regulations or incites harm.

The struggle to protect Bangladesh's democratic process now extends far beyond physical polling booths. It is unfolding within the quiet, personal space of each citizen's mobile screen. Deepfakes are not merely pieces of misinformation; they are psychological weapons engineered to confuse, provoke, and destabilise. Our democratic resilience, therefore, relies on a combination of state-led frameworks, international cooperation, and, most critically, the vigilance of ordinary voters.

To safeguard electoral legitimacy, voters must transform their screens into tools of verification rather than channels of manipulation. Democracy in the digital era will survive not through blind trust, but through informed suspicion and responsible civic engagement. Only then can democratic choice rest on truth rather than deception.

Dr SM Rezwan-Ul-Alam is associate professor of media, communication and journalism at the Department of Political Science and Sociology, North South University. He can be reached at rezwan.alam01@northsouth.edu.

Views expressed in this article are the author's own.

Follow The Daily Star Opinion on Facebook for the latest opinions, commentaries, and analyses by experts and professionals. To contribute your article or letter to The Daily Star Opinion, see our guidelines for submission.

For all latest news, follow The Daily Star's Google News channel.
